Dotting GenAI: Pixel Art Editor With Generative AI

Posted on: 2023-04-11

Category: Machine Learning, Product Design, Web Development

Generative AI is everywhere nowadays, and everyone is hyped about it. However, while generative features such as text-to-image AI are promising, they share one limitation: it is not easy to modify the generated content the way you intended. Especially in text-to-image generation, conveying how you want an image changed through text prompts alone is a nightmare.

For mass adoption of generative AI, it is crucial that users can easily modify the generated image output as they intend. In most scenarios, the edits users want to make are simple ones, such as changing the color of an area or removing a specific region from the image. To support this, generative AI services should be integrated into an editor that provides additional modification tools. At the moment, however, there are no definitive software design principles for merging the two distinct experiences: generating and editing.

To test ways of integrating generative AI into editors myself, I created a pixel-art editor with generative AI features and named it Dotting GenAI. I also entered this project in a 2023 hackathon hosted by Primer, a Korean venture capital firm, with a team of three developers. Dotting GenAI was selected as one of the top 16 projects in the hackathon.

Chat Agent for Generating Pixel-art

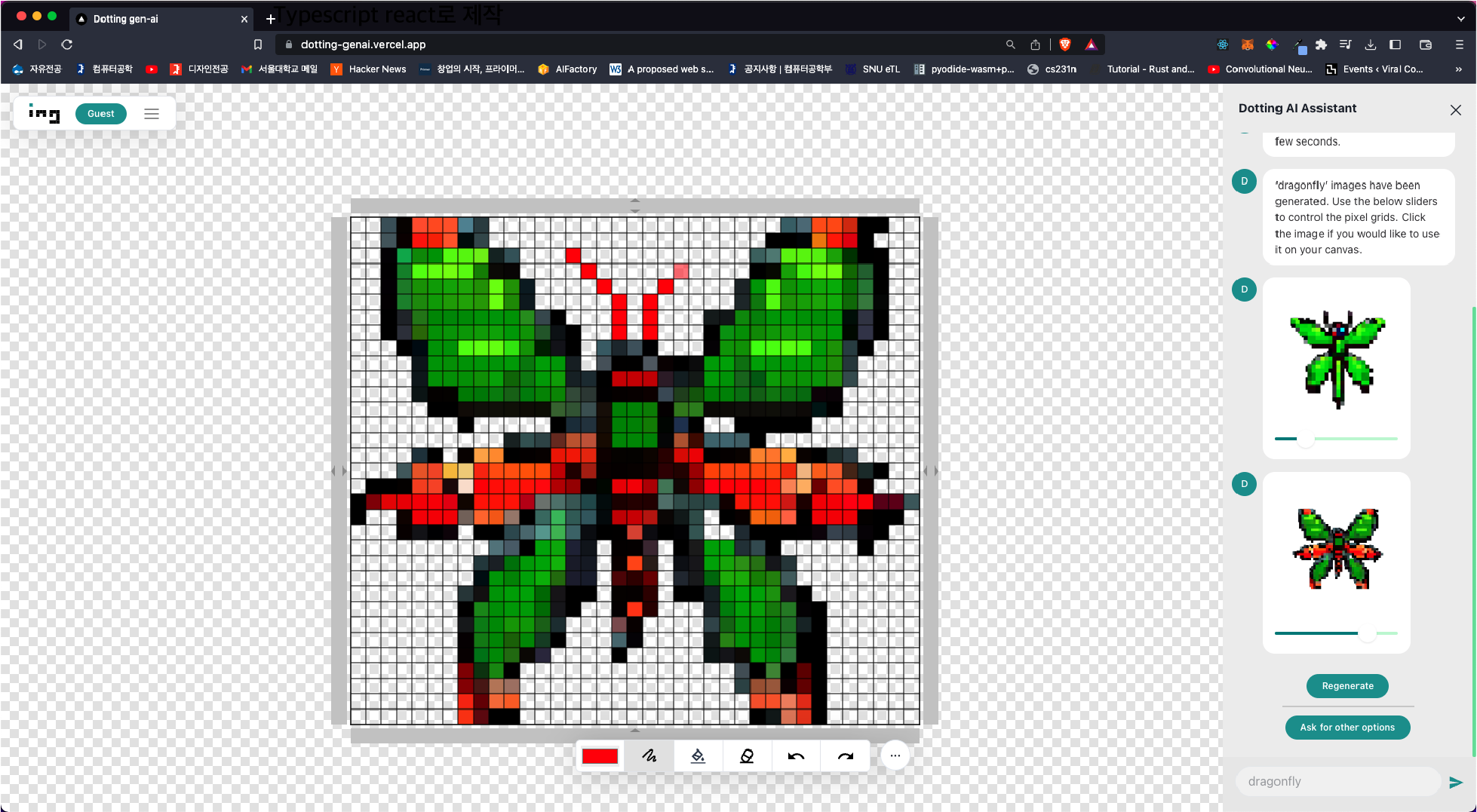

Dotting GenAI lets users interact with the generative AI through a chat interface on the right side of the screen. When the user asks for an image, the AI generates one based on the request, and the generated images are returned as replies in the chat log. The user can then place a generated image on the pixel art canvas and modify it with the editor tools that Dotting GenAI provides.

How the AI was implemented

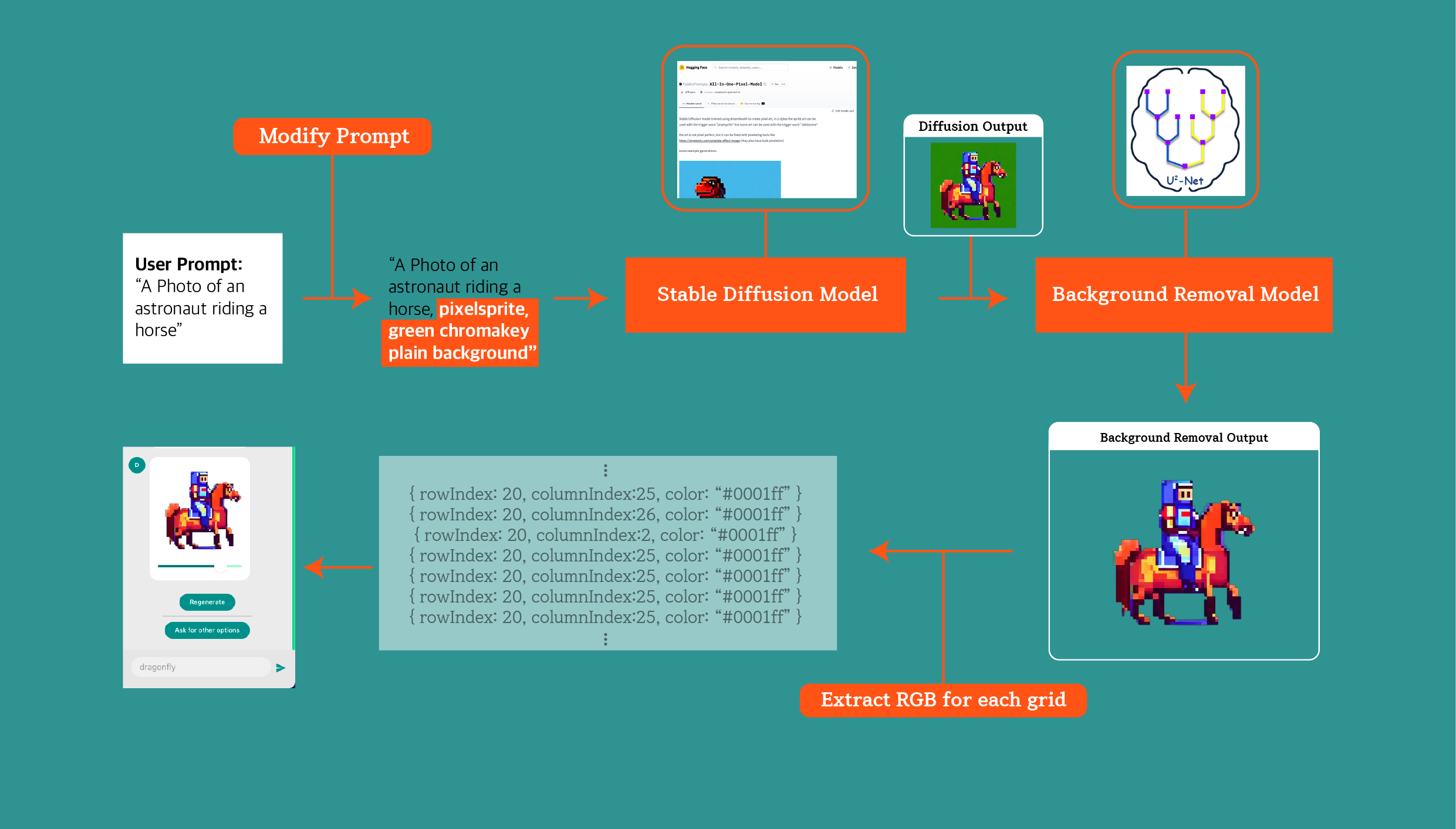

In the backend of Dotting GenAI, my team members and I devised a two-stage pipeline for generating pixel art. The first stage used a Stable Diffusion model to generate a pixel art image based on the user's request. We used the All-In-One-Pixel-Model from Hugging Face for this task. Since we wanted the model to generate clean pixel art, we experimented with different prompts and found that using the prompt "pixelsprite, green chromakey plain background" helped the model generate a clean pixel-art image.
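As a small illustration of the prompt step, the sketch below appends the style keywords quoted above to a user's chat request. The helper name `build_prompt` and the way the two parts are joined are assumptions made for this example, not the project's actual code.

```python
# The style keywords come from the prompt quoted in this post; how they
# were combined with the user's request is an assumption.
STYLE_SUFFIX = "pixelsprite, green chromakey plain background"


def build_prompt(user_request: str, style_suffix: str = STYLE_SUFFIX) -> str:
    """Join the user's request and the style keywords into one prompt."""
    # Drop surrounding whitespace and trailing punctuation so the
    # keywords can be appended as a comma-separated list.
    subject = user_request.strip().rstrip(",. ")
    if not subject:
        raise ValueError("user_request must not be empty")
    return f"{subject}, {style_suffix}"


print(build_prompt("a knight with a sword"))
```

The resulting string would then be passed to whichever text-to-image pipeline serves the model.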

After the Stable Diffusion model generated the images, we used a U2-Net model to remove the background from each one. Since we had generated the images with a green chromakey background, the model could remove the background easily.
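To make the background-removal step concrete, here is a minimal sketch of how a foreground mask can be turned into transparency. It assumes the segmentation model yields a per-pixel foreground score between 0 and 1; the function name `apply_mask` and the hard 0.5 threshold are choices made for this example, not details from our pipeline.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
RGBA = Tuple[int, int, int, int]


def apply_mask(
    image: List[List[RGB]],
    mask: List[List[float]],
    threshold: float = 0.5,
) -> List[List[RGBA]]:
    """Make every pixel whose foreground score is below `threshold` transparent."""
    if len(image) != len(mask) or any(
        len(row) != len(scores) for row, scores in zip(image, mask)
    ):
        raise ValueError("image and mask must have the same dimensions")
    result = []
    for row, scores in zip(image, mask):
        result.append(
            [
                # Alpha 255 keeps the pixel, alpha 0 hides it.
                (r, g, b, 255 if score >= threshold else 0)
                for (r, g, b), score in zip(row, scores)
            ]
        )
    return result
```

A uniform chromakey background gives the mask a sharp boundary to find, which is why the green background in the prompt helps here.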

After the two-stage pipeline, we extracted the RGB value of each grid cell in the generated image. There were two reasons for this. First, to color the pixels in the editor, we needed the RGB value of each pixel. Second, since the U2-Net model is an encoder-decoder model, its output might lose the clear-cut pixel-art style. By extracting one RGB value per grid cell, we could ensure that the final image kept a pixel-art style.
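The extraction step can be sketched as follows. This version picks the most common color inside each grid cell (a majority vote), which is one plausible way to snap slightly blurred output back to a single flat color; the exact sampling rule we used is not described here, so treat the voting rule and the name `extract_grid_colors` as assumptions.

```python
from collections import Counter
from typing import List, Optional, Tuple

RGB = Tuple[int, int, int]
RGBA = Tuple[int, int, int, int]


def extract_grid_colors(
    image: List[List[RGBA]], cell_size: int
) -> List[List[Optional[RGB]]]:
    """Return one color per grid cell, or None for mostly transparent cells."""
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    height = len(image)
    width = len(image[0]) if height else 0
    grid = []
    for top in range(0, height, cell_size):
        grid_row = []
        for left in range(0, width, cell_size):
            votes = Counter()
            # Cells on the right and bottom edges may be smaller than cell_size.
            for y in range(top, min(top + cell_size, height)):
                for x in range(left, min(left + cell_size, width)):
                    r, g, b, a = image[y][x]
                    # Fully transparent pixels vote for "no color".
                    votes[(r, g, b) if a > 0 else None] += 1
            grid_row.append(votes.most_common(1)[0][0])
        grid.append(grid_row)
    return grid
```

Each entry in the returned grid corresponds to one pixel on the editor canvas, and `None` marks a cell that should stay empty.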

Frontend editor

The size of the grid used for extracting the colors can be controlled in the frontend of Dotting GenAI. This is necessary because the art pixels in a generated image can vary in size, so the grid size has to be adjusted to match them.
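The effect of the grid size is simple arithmetic, sketched below. The 512x512 image size is a hypothetical example and `grid_dimensions` is a name made up for this illustration; the actual frontend is a React app, so this only shows the calculation.

```python
import math
from typing import Tuple


def grid_dimensions(
    image_width: int, image_height: int, cell_size: int
) -> Tuple[int, int]:
    """Return (columns, rows) of the grid needed to cover the image."""
    if image_width <= 0 or image_height <= 0 or cell_size <= 0:
        raise ValueError("all arguments must be positive")
    # Round up so partial cells at the right and bottom edges are kept.
    return math.ceil(image_width / cell_size), math.ceil(image_height / cell_size)


# Hypothetical 512x512 image: a smaller cell size yields a larger canvas.
for cell_size in (4, 8, 16):
    columns, rows = grid_dimensions(512, 512, cell_size)
    print(f"cell size {cell_size}px -> {columns}x{rows} canvas")
```

If the chosen cell size is larger than the art pixels in the image, neighboring colors get merged into one cell; if it is smaller, each art pixel is split across several canvas pixels.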

The basic editor was built with Dotting, a React component that I developed for pixel art editing. The component lets users draw with brush tools, fill tools, and color pickers. The editor also lets users change the grid size of the canvas, so they can enlarge the canvas if they want to include more characters in the pixel art.

User Test

After developing the service, I conducted a user test with people who specialize in design to find out 1) whether my software design approach was effective, and 2) whether the generative AI was useful to them. The user test was conducted with 12 design experts, and the results revealed some interesting insights.

Most users found it comfortable to modify the generated images directly in the editor. However, they had many worries about using generative AI in their work. Some reported that although generated images do encourage untried styles, they become a burden when the user wants to create a specific image they already have in mind. Some also reported that they would rather have a generative AI that learns from their own styles than one that produces arbitrary images from prompts. Readers interested in the study can download the PDF file for more details.